5 Best AI Voice Cloning Tools for Games and Characters (2026)

Dec 19, 2025

A character’s voice does more than deliver dialogue. It sets rhythm, signals intent, and tells the player how to feel before the words even land. In games, that effect compounds over time. A voice that sounds slightly off might be tolerable in a single cutscene, but it quickly becomes distracting when it repeats across dozens of encounters or reacts awkwardly in live dialogue. For AI companions and character chatbots, a robotic voice breaks immersion immediately and hurts retention.

Voice cloning has become a practical way to build and scale character audio without booking a studio session for every line. Teams use it to prototype early, ship branching dialogue, localize characters, and experiment with AI-driven NPCs that speak on the fly. The difference between tools now isn’t whether they sound good in isolation, but whether they hold up inside a game engine and under real player behavior while keeping the experience immersive.

What Matters for Games and Character Voices

Game audio has different demands than narration or video:

- Consistency across lines. Characters may speak thousands of times. The voice can’t drift.

- Emotional range. Combat barks, calm dialogue, panic, sarcasm. One tone isn’t enough.

- Low latency. For interactive dialogue or AI-driven NPCs, any delay longer than a natural human response pause breaks immersion.

- Scalability. You need to generate many lines of audio without manually regenerating and correcting each one.

- Cloning quality. A character voice should stay recognizable even with short or imperfect source recordings.

If you’re building branching dialogue, live NPC agents, or story-heavy games, these factors matter more than a polished demo voice.
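Consistency and scalability are partly a pipeline concern, not only a model concern. The sketch below is one possible approach, assuming a generic TTS client: each character is pinned to one cloned voice ID, and generated audio is cached by a hash of everything that affects the output, so rerunning a batch only regenerates lines whose text or emotion changed. The `synthesize` signature, the voice ID, and all names here are hypothetical placeholders, not any vendor’s API.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Callable, Dict, List

@dataclass(frozen=True)
class Line:
    character: str
    text: str
    emotion: str

# Hypothetical signature for whatever TTS client you use: (voice_id, text, emotion) -> audio bytes.
Synthesize = Callable[[str, str, str], bytes]

def cache_key(voice_id: str, line: Line) -> str:
    # Hash every input that changes the audio; editing the text or emotion forces regeneration.
    payload = json.dumps([voice_id, line.emotion, line.text]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()

def generate_batch(lines: List[Line], voices: Dict[str, str],
                   synthesize: Synthesize, cache: Dict[str, bytes]) -> List[bytes]:
    """Return audio for each line in order, calling synthesize only on cache misses."""
    audio = []
    for line in lines:
        voice_id = voices[line.character]  # one fixed cloned voice per character
        key = cache_key(voice_id, line)
        if key not in cache:
            cache[key] = synthesize(voice_id, line.text, line.emotion)
        audio.append(cache[key])
    return audio

# Usage with a stand-in synthesizer that records how often it is called.
calls: List[str] = []

def fake_synthesize(voice_id: str, text: str, emotion: str) -> bytes:
    calls.append(text)
    return f"{voice_id}:{emotion}:{text}".encode("utf-8")

voices = {"guard": "guard-voice"}  # hypothetical voice ID
script = [Line("guard", "Halt!", "angry"), Line("guard", "Move along.", "calm")]
cache: Dict[str, bytes] = {}
generate_batch(script, voices, fake_synthesize, cache)
generate_batch(script, voices, fake_synthesize, cache)  # second pass is served from the cache
print(len(calls))  # 2
```

In a real project the in-memory dict would likely be replaced by files on disk named after the hash, so the cache survives between builds.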

5 Top AI Voice Cloning Tools for Games (2026)

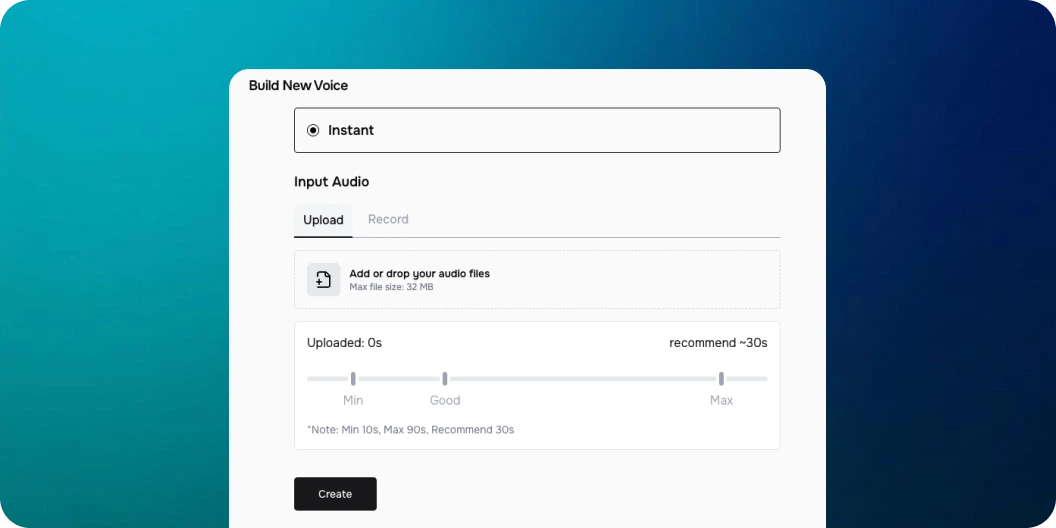

1. Fish Audio

Fish Audio is the strongest option for character voices right now. It handles expressive delivery without collapsing into monotone repetition, even across long sessions. Voice cloning works from short samples and stays stable across emotional shifts.

- Use cases: NPC dialogue, playable characters, AI-driven companions

- Strength: high emotional realism and strong voice identity

- Workflow: real-time streaming, batch generation, API and SDKs

Fish supports emotion control that lets you shape tone at the word level. This makes it well suited to games where the same character needs to whisper in one scene and shout in another without sounding like a different person. With latency under 500 ms, it is fast enough for interactive dialogue, which makes it practical for live NPCs rather than only pre-rendered lines.
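Latency figures are worth verifying in your own setup, since network distance and text length both affect them. A minimal sketch, assuming your client exposes streamed audio as an iterable of byte chunks: start a timer before opening the stream and record when the first chunk arrives. The `fake_stream` function is a hypothetical stand-in for a real streaming response, and the 500 ms budget is simply the figure quoted above.

```python
import time
from typing import Callable, Iterable, Iterator, Tuple

LATENCY_BUDGET_S = 0.5  # the sub-500 ms figure quoted above

def time_to_first_audio(open_stream: Callable[[], Iterable[bytes]]) -> Tuple[float, int]:
    """Start the clock, open the stream, and return (seconds to first chunk, total bytes)."""
    start = time.perf_counter()  # start before the request is sent
    first_chunk_s = None
    total_bytes = 0
    for chunk in open_stream():
        if first_chunk_s is None:
            first_chunk_s = time.perf_counter() - start
        total_bytes += len(chunk)
    if first_chunk_s is None:
        raise ValueError("stream returned no audio")
    return first_chunk_s, total_bytes

def fake_stream() -> Iterator[bytes]:
    # Hypothetical stand-in for a streaming TTS response: waits 120 ms, then yields five chunks.
    time.sleep(0.12)
    for _ in range(5):
        yield b"\x00" * 960

ttfa, size = time_to_first_audio(fake_stream)
print(f"first audio after {ttfa * 1000:.0f} ms ({size} bytes); "
      f"within budget: {ttfa < LATENCY_BUDGET_S}")
```

Time to first audio matters more than total generation time for live NPCs, because playback can begin while the rest of the line is still streaming in.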

2. ElevenLabs

ElevenLabs is widely used for character narration and cinematic dialogue.

- Use cases: cutscenes, scripted dialogue, narration-heavy games

- Strength: smooth delivery and large voice library

- Notes: emotional control is more limited, costs rise at scale

It works well for controlled environments like cutscenes, but can feel less flexible for reactive dialogue systems.

3. Cartesia

Cartesia is built with real-time generation in mind.

- Use cases: interactive NPCs, AI agents, fast dialogue systems

- Strength: very low latency

- Notes: voices can sound flatter in long or emotional scenes

If your game relies on live conversation rather than authored scripts, Cartesia’s speed is a real advantage.

4. Hume

Hume focuses on emotional expression rather than clean narration.

- Use cases: experimental games, character-driven storytelling

- Strength: strong emotional modulation

- Notes: less consistent across long sessions and can hallucinate phrasing

It’s useful for mood-heavy scenes, but not ideal for large dialogue trees where consistency matters.

5. Speechify

Speechify is simple and predictable, though less specialized for games.

- Use cases: placeholder dialogue, early prototyping

- Strength: clear output and simple generation

- Notes: limited character depth and control

It’s often used early in development before switching to a more expressive system.

Voice Cloning Tips for Game Characters

A few practices that consistently improve results:

- Record clean source audio. One speaker, minimal noise, stable volume. Even short clips work better when they’re controlled.

- Design emotional ranges per character. Decide which emotions each character uses and limit the extremes. This keeps voices believable over time.

- Test in context. A line that sounds fine alone can feel wrong in gameplay. Always test inside the game engine.

- Spot-check often. Catch pronunciation drift or pacing issues early before generating thousands of lines.
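Two of these tips can be enforced with a small pre-flight check before a large batch run. The sketch below assumes a simple script format of `(character, emotion, text)` tuples and a hand-written table of allowed emotions per character; both are hypothetical conventions for illustration. It flags lines tagged outside a character’s designed range, then picks a reproducible random subset for a human listening pass.

```python
import random
from typing import Dict, List, Set, Tuple

# Hypothetical per-character emotion ranges, decided up front by the design team.
EMOTION_RANGES: Dict[str, Set[str]] = {
    "guard": {"calm", "stern", "angry"},
    "companion": {"calm", "warm", "worried", "playful"},
}

ScriptLine = Tuple[str, str, str]  # (character, emotion, text)

def out_of_range(lines: List[ScriptLine]) -> List[ScriptLine]:
    """Return lines tagged with an emotion the character was not designed to use."""
    return [ln for ln in lines if ln[1] not in EMOTION_RANGES.get(ln[0], set())]

def spot_check_sample(lines: List[ScriptLine], fraction: float,
                      minimum: int, seed: int) -> List[ScriptLine]:
    """Pick a reproducible random subset of lines for a listening review."""
    k = min(len(lines), max(minimum, round(len(lines) * fraction)))
    rng = random.Random(seed)  # fixed seed: the same batch yields the same review list
    return rng.sample(lines, k)

script: List[ScriptLine] = [
    ("guard", "angry", "Halt!"),
    ("guard", "playful", "Nice hat."),
    ("companion", "worried", "Are you hurt?"),
] + [("companion", "calm", f"Line {i}") for i in range(97)]

flagged = out_of_range(script)
review = spot_check_sample(script, fraction=0.05, minimum=3, seed=7)
print(len(flagged), len(review))  # 1 5
```

A fixed seed means the reviewer and the build log agree on exactly which lines were checked; changing the seed per batch gives fresh coverage over time.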

Fish Audio’s cloning holds up well here. Its ability to maintain character identity while shifting emotion is why many teams use it beyond prototyping and into production.

Final Thoughts

Game audio workflows are changing. Dialogue is no longer a fixed asset recorded once and locked forever. Characters talk more, react more, and exist across updates, DLCs, and live systems. Voice tools have to keep up with that pace.

Some teams will still record key scenes in a studio and fill the rest with synthetic speech. Others will lean fully into generated voices for NPCs and companions. Either way, the tool needs to stay consistent, flexible, and fast once it’s wired into the engine.

For 2026, Fish Audio fits that role best. It gives developers enough control to shape characters without turning voice generation into a bottleneck. If you’re building characters players are meant to spend real time with, that reliability matters.