Audio Separation Full Guide and Review 2026

Jan 28, 2026

Audio separation has grown from a niche technical specialty into a staple of modern creative workflows. In 2026, AI audio separation is no longer an experimental technology; it’s a widely used tool for musicians, producers, DJs, podcasters, and creators of all kinds. Whether you want to separate vocals and instruments, split a track into stems for a remix, or clean up dialogue in a noisy recording, audio source separation tools are faster, more accurate, and more accessible than ever.

This Audio Separation Full Guide and Review 2026 walks you through how audio demixing works, why it matters today, the most popular use cases, current limitations, and where the technology is headed next. If your goal is to separate music audio accurately using AI, this guide covers what you need to know.

What Is Audio Separation (and Why Does It Matter)?

Audio separation, also known as audio source separation or audio demixing, is the process of taking a mixed audio file (like a stereo song) and isolating its individual components:

- Vocals

- Drums

- Bass

- Guitar

- Piano

- Synths

- Dialogue or speech

- Sound effects

Traditionally, once instruments and vocals were “baked” into a stereo file, separating them again was nearly impossible. Engineers had to rely on EQ filtering, phase cancellation, or re-recording parts, all of which were time-consuming and imperfect. In contrast, AI audio separation now uses deep learning to recognize and extract individual sound elements far more accurately than those manual methods could.
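To make the older phase-based approach concrete, here is a minimal sketch of center-channel cancellation, assuming a stereo signal held in a NumPy array. The function name `remove_center` and the synthetic "vocal" and "guitar" tones are invented for illustration and do not come from any specific tool.

```python
import numpy as np

def remove_center(stereo: np.ndarray) -> np.ndarray:
    """Subtract the right channel from the left (a classic pre-AI trick).

    stereo: float array of shape (num_samples, 2).
    Anything panned dead center (identical in both channels) cancels out.
    Sounds panned to one side survive, so the result is only a rough
    "instrumental", which is why this method was considered imperfect.
    """
    left, right = stereo[:, 0], stereo[:, 1]
    return 0.5 * (left - right)

# Synthetic test signal (all values here are made up for illustration).
sr = 8000
t = np.arange(sr) / sr
vocal = np.sin(2 * np.pi * 440 * t)          # center-panned "vocal"
guitar = 0.5 * np.sin(2 * np.pi * 196 * t)   # hard-left "guitar"
stereo = np.stack([vocal + guitar, vocal], axis=1)

side = remove_center(stereo)  # the vocal cancels, a scaled guitar remains
```

The trick only works when the vocal is identical in both channels. Stereo reverb, wide effects, or center-panned bass and drums break that assumption, which is the gap learned models aim to close.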

How AI Audio Separation Works

Today’s audio source separation systems are built on deep neural networks that learn how sound behaves over time, frequency, and dynamics.

Core Technologies Powering Audio Demixing

- Spectrogram Analysis: Audio is transformed into a time-frequency representation (a spectrogram) in which instruments and vocals are easier for a model to tell apart.

- Neural Networks & Transformers: These architectures identify subtle differences between overlapping sounds, making it possible to reliably separate vocals and instruments.

- Masking Techniques: AI creates “masks” that isolate selected sounds while suppressing others.

- Contextual Learning: Modern models learn musical context, such as what voices or guitar tones typically sound like, which helps them separate sources even in dense mixes.

Thanks to these advances, tools that perform music track separation are now faster and cleaner, and their output is closer to studio quality than ever.
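The spectrogram and masking ideas listed above can be sketched in a few lines of NumPy. This is a simplified illustration, not how any particular product works: in a real system a neural network predicts the mask from the mix alone, whereas this sketch cheats by computing an "oracle" mask from the known sources. The frame sizes, helper names, and test tones are all assumptions chosen for the example.

```python
import numpy as np

N_FFT, HOP = 512, 128  # illustrative frame and hop sizes

def stft(x):
    """Return a complex spectrogram of shape (frames, N_FFT // 2 + 1)."""
    win = np.hanning(N_FFT)
    starts = range(0, len(x) - N_FFT + 1, HOP)
    return np.array([np.fft.rfft(win * x[s:s + N_FFT]) for s in starts])

def istft(spec, length):
    """Overlap-add inverse of stft(), normalised by the summed window."""
    win = np.hanning(N_FFT)
    out = np.zeros(length)
    norm = np.zeros(length)
    for i, frame in enumerate(spec):
        s = i * HOP
        out[s:s + N_FFT] += win * np.fft.irfft(frame, n=N_FFT)
        norm[s:s + N_FFT] += win ** 2
    return out / np.maximum(norm, 1e-8)

# Two synthetic "sources" and their mix (made-up test tones).
sr = 8000
t = np.arange(sr) / sr
bass = np.sin(2 * np.pi * 220 * t)
vocal = np.sin(2 * np.pi * 2000 * t)
mix = bass + vocal

# Oracle ratio mask: the share of each time-frequency bin owned by the vocal.
V, B = np.abs(stft(vocal)), np.abs(stft(bass))
mask = V / (V + B + 1e-8)              # values between 0 and 1
spec = stft(mix)
vocal_est = istft(mask * spec, len(mix))

# Compare against the true vocal, ignoring the edges that few frames cover.
inner = slice(N_FFT, -N_FFT)
err_mix = np.mean((mix[inner] - vocal[inner]) ** 2)
err_est = np.mean((vocal_est[inner] - vocal[inner]) ** 2)
print(f"error before masking: {err_mix:.4f}, after masking: {err_est:.6f}")
```

The two tones here barely overlap in frequency, so the mask separates them almost perfectly. Real instruments share many frequency bins, which is where learned models and the artifacts discussed later come in.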

Why Audio Separation Is a Big Deal in 2026

The rise of AI audio separation isn’t accidental. Several trends have converged to make this technology essential:

1. Creator Economy

Creators on platforms like TikTok and YouTube want clean audio. Separating music audio lets them remove vocals for backing tracks, isolate music for educational content, or enhance dialogue in videos.

2. Music Production & Remix Culture

Producers and DJs use audio source separation to:

- Create remixes

- Extract acapellas

- Rework old demos

- Build new beats from isolated stems

3. Music Education and Learning

Musicians use tools that separate vocals and instruments to:

- Practice with backing tracks

- Analyze arrangements

- Study specific parts

4. Restoration and Archiving

Archivists and audio engineers use audio demixing to restore old recordings, isolate speeches, or clean up mixed material for preservation or re-release.

5. Media Production

Film, TV, and podcast producers now rely on AI audio separation to isolate dialogue from background sound when original multitracks aren’t available.

Types of Audio Separation Tools in 2026

Not all separation tools are the same. The most common categories include:

✔ Vocal and Instrument Separation

The simplest and most widespread form of audio source separation, letting you isolate or mute vocals while keeping the music.

✔ Stem Separation

More advanced tools break a track into multiple stems, such as:

- Vocals

- Drums

- Bass

- Other instruments

This type of music track separation is essential for professional remixing and production workflows.

✔ Dialogue vs Background Separation

Widely used in film and podcast editing to isolate voices from music and sound effects.

✔ Genre-Specific Models

Some AI models are optimized for specific genres such as pop, hip-hop, rock, or classical, improving separation accuracy for those styles.

Best Use Cases for Audio Separation

🎧 Music Production

Producers use audio demixing to extract vocals, reconstruct beats, and create entirely new versions of existing tracks.

🎛 DJing & Live Performance

DJs rely on music track separation to isolate vocals for live mashups, create instrumental breaks, or build custom transitions.

📱 Content Creation

Creators can:

- Remove copyrighted vocals

- Isolate background music

- Improve dialogue clarity in videos

📚 Music Education

Teachers and students use vocal and instrument separation to slow down tracks, focus on individual parts, and analyze techniques.

🔊 Accessibility & Broadcast

Isolated speech tracks deliver clearer audio for hearing-impaired listeners and cleaner post-mix dialogue for broadcast.

Strengths and Limitations

✔ Strengths

- Rapid turnaround and processing

- Cleaner vocal and instrument extraction

- Better handling of overlapping frequencies

- User-friendly interfaces for beginners

✖ Limitations

- Artifacts in extremely dense mixes

- Reverb and effects can blur sources

- Complex orchestral or layered recordings still challenge separation models

Despite these challenges, audio source separation tools in 2026 are significantly more capable and reliable than earlier generations of tools.

Audio Separation Workflow (User-Friendly in 2026)

A typical workflow today might look like:

- Upload an audio file

- Choose separation type (vocal/instrumental, stems, dialogue)

- Let the AI process the audio

- Preview the separated tracks

- Export stems for remixing or editing

This simplified experience has made separating music audio easy even for beginners.
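For readers who script this kind of workflow rather than clicking through an interface, the steps above might look roughly like the sketch below. It uses only Python's standard `wave` module and NumPy. Every function name is hypothetical, and `placeholder_separate` is a stand-in that merely splits a stereo file into mid and side signals so the pipeline runs; it is not a real AI separator and not any tool's actual API. The preview step is left out because it depends on the playback environment.

```python
import os
import wave
import numpy as np

def load_wav(path):
    """Step 1 (upload): read a 16-bit PCM WAV into floats in [-1, 1]."""
    with wave.open(path, "rb") as wf:
        assert wf.getsampwidth() == 2, "this sketch handles 16-bit PCM only"
        sr, ch = wf.getframerate(), wf.getnchannels()
        raw = wf.readframes(wf.getnframes())
    audio = np.frombuffer(raw, dtype="<i2").reshape(-1, ch) / 32768.0
    return audio, sr

def save_wav(path, audio, sr):
    """Step 5 (export): write a mono float array as 16-bit PCM."""
    pcm = (np.clip(audio, -1.0, 1.0) * 32767).astype("<i2")
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(sr)
        wf.writeframes(pcm.tobytes())

def placeholder_separate(audio, mode):
    """Steps 2-3 (choose type, process): stand-in for the AI model.

    Expects stereo input of shape (num_samples, 2). A real tool would run
    a trained network here; this only computes mid and side signals.
    """
    if mode != "vocal/instrumental":
        raise ValueError(f"unsupported mode in this sketch: {mode}")
    mid = 0.5 * (audio[:, 0] + audio[:, 1])
    side = 0.5 * (audio[:, 0] - audio[:, 1])
    return {"vocals": mid, "instrumental": side}

def run_workflow(in_path, out_dir, mode="vocal/instrumental"):
    """Load a file, separate it, and export one WAV per stem."""
    audio, sr = load_wav(in_path)
    stems = placeholder_separate(audio, mode)
    paths = {}
    for name, stem in stems.items():
        paths[name] = os.path.join(out_dir, f"{name}.wav")
        save_wav(paths[name], stem, sr)
    return paths
```

Calling `run_workflow("song.wav", "stems")` on a stereo 16-bit file (with an existing `stems` folder) would write `vocals.wav` and `instrumental.wav`. Swapping the placeholder for a real model is the only step that changes the quality of the result.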

The Future of Audio Separation

Looking ahead, the future of AI audio separation includes:

- Real-time separation during live streams and performances

- Personalized AI models tuned to specific voices or instruments

- Multimodal systems that integrate video and metadata for better accuracy

- Ethical safeguards for copyright and consent

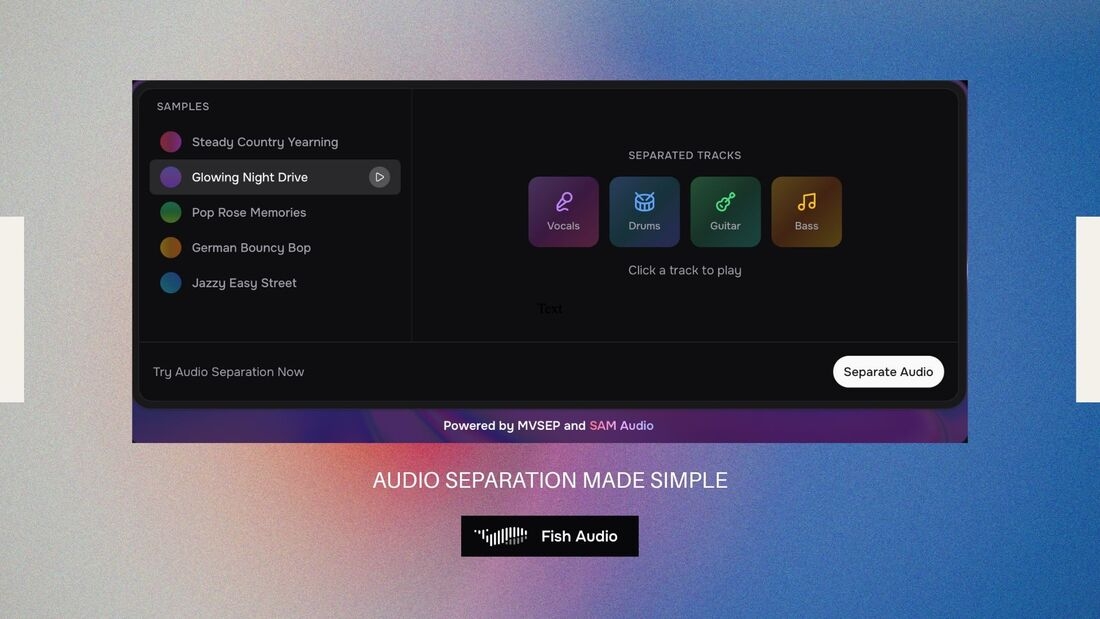

Fish Audio has one of the most accessible audio separation tools you can try today.

With tools like Fish Audio, AI audio demixing is rapidly becoming a standard part of every sound professional’s toolkit.

Final Verdict: Audio Separation in 2026

Whether you’re a producer remixing tracks, a podcaster cleaning dialogue, a DJ prepping a set, or a teacher showing students how a song is built, the ability to separate vocals and instruments, split tracks into accurate stems, and build audio source separation into everyday workflows has reshaped how we interact with sound.

From mainstream tools to cutting-edge research (like AI models that learn from massive audio datasets), audio demixing is now a fundamental skill for anyone working with audio, and this is just the beginning.