How AI Audio Separation Works

Feb 6, 2026

AI audio separation models have fundamentally changed how media professionals, creators, and researchers understand and manipulate sound, making it possible to isolate speech, music, and background noise from a single recording with remarkable accuracy. To appreciate this technology, it helps to learn how audio separation works, what happens behind the scenes, and why modern AI-driven approaches so often outperform traditional methods.

In this article, we’ll explore how audio separation works from both a conceptual and technical perspective, explain the evolution of AI audio separation models, and examine how real-world tools like Fish Audio and SAM audio put these ideas into practice.

What Is Audio Separation?

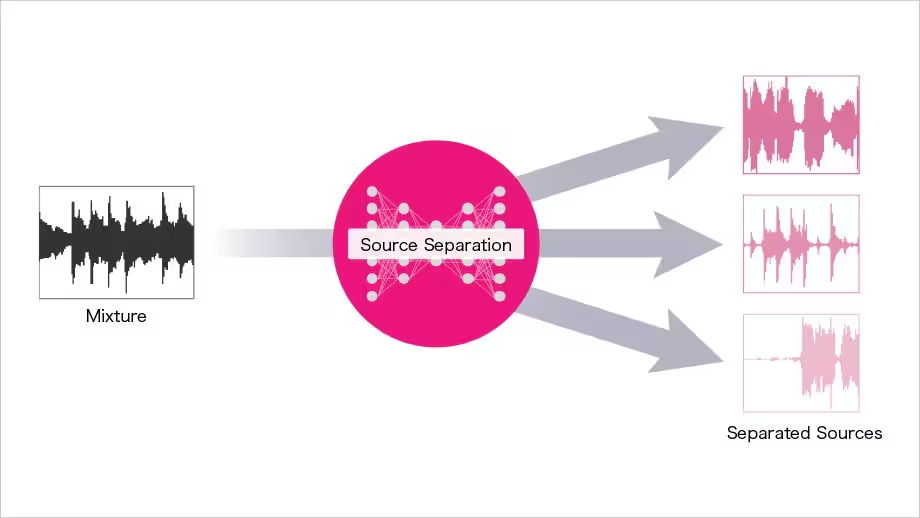

Audio separation is the process of breaking a mixed audio signal into its individual components. These components may include speech, music, ambient noise, sound effects, or even individual speakers. Understanding how audio separation works begins with recognizing that most recordings are mixtures, not isolated sound sources.

Historically, separating these components required manual editing, frequency filtering, or expensive studio-grade tools. Today, AI audio separation models can analyze a single waveform and predict which parts belong to which sound source, often within seconds.

At its core, how audio separation works depends on identifying patterns within sound—patterns that distinguish a human voice from traffic noise or a musical instrument from background ambience.

Why Audio Separation Is Hard

To understand why AI audio separation models are so valuable, you first need to understand why the problem itself is difficult. Sound sources overlap heavily in time and frequency. Two people speaking at once often occupy similar frequency ranges, making it extremely challenging to isolate one voice using traditional techniques.

Other complications include:

- Reverberation and echo
- Low-quality recordings
- Dynamic background noise
- Compression artifacts
- Overlapping speech and music

Classic signal processing approaches struggle here, which is why learning how audio separation works in an AI context is so important.

Traditional Audio Separation Methods

Before AI audio separation models became widespread, engineers relied on rule-based approaches. These included:

Frequency Filtering

This method removes or reduces certain frequency bands. While effective for simple noise removal, it fails when speech and noise overlap in frequency.
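As a rough illustration of frequency filtering, the sketch below uses a naive discrete Fourier transform built from the Python standard library to zero every bin below a cutoff. The helper names (`dft`, `idft`, `high_pass`) and the toy signal are assumptions made for this example; real tools would normally use an FFT library instead.

```python
import cmath
import math


def dft(signal):
    """Naive O(N^2) discrete Fourier transform of a sequence."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Inverse of dft(); returns the real part of each reconstructed sample."""
    n = len(spectrum)
    return [
        (sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
        for t in range(n)
    ]


def high_pass(signal, cutoff_bin):
    """Zero every bin whose frequency index is below cutoff_bin (hypothetical helper)."""
    n = len(signal)
    spectrum = dft(signal)
    for k in range(n):
        # Bin k and bin n - k hold the same frequency for a real signal.
        if min(k, n - k) < cutoff_bin:
            spectrum[k] = 0
    return idft(spectrum)


N = 64
hum = [math.sin(2 * math.pi * 2 * t / N) for t in range(N)]    # low-frequency noise
tone = [math.sin(2 * math.pi * 10 * t / N) for t in range(N)]  # wanted signal
mixture = [h + s for h, s in zip(hum, tone)]

filtered = high_pass(mixture, cutoff_bin=5)
max_error = max(abs(f - s) for f, s in zip(filtered, tone))
```

The filter succeeds here only because the hum and the tone sit in different bins. If both occupied bin 10, zeroing that bin would remove the wanted signal as well, which is the overlap failure described above.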

Phase Cancellation

Used in stereo recordings, phase cancellation can isolate sounds positioned in specific spatial locations. However, it only works under very controlled conditions.
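A minimal sketch of the idea, assuming an idealized stereo mix in which the vocal is identical in both channels and a guitar appears only on the left. The signals and the `cancel_centre` helper are made up for illustration.

```python
import math

N = 100
vocal = [math.sin(2 * math.pi * 5 * t / N) for t in range(N)]    # panned dead centre
guitar = [math.sin(2 * math.pi * 12 * t / N) for t in range(N)]  # panned hard left

# A centred source appears identically in both channels.
left = [v + g for v, g in zip(vocal, guitar)]
right = list(vocal)


def cancel_centre(left_channel, right_channel):
    """Subtract the channels so anything identical in both cancels out."""
    return [l - r for l, r in zip(left_channel, right_channel)]


side = cancel_centre(left, right)
# How far the result is from the guitar alone; near zero means the vocal is gone.
residual_vocal = max(abs(s - g) for s, g in zip(side, guitar))
```

The cancellation depends on the vocal being sample-for-sample identical in both channels. Stereo reverb, slight panning, or lossy compression breaks that assumption, which is why the technique is so fragile in practice.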

Manual Editing

Audio engineers often manually cut, mute, or attenuate parts of a waveform. This process is time-consuming and impractical for large-scale workflows.

These limitations laid the groundwork for modern AI-driven solutions and pushed researchers to rethink how audio separation works at a fundamental level.

The Rise of AI Audio Separation Models

AI audio separation models use machine learning to learn patterns from massive datasets of mixed and isolated audio. Instead of following fixed rules, these models learn how different sound sources behave statistically.

By training on thousands or even millions of examples, AI systems learn:

- What speech typically sounds like
- How music differs from dialogue
- How background noise behaves over time
- How overlapping sounds interact

This learning-based approach is the key reason AI audio separation models perform so well across a wide range of real-world scenarios.

How Audio Separation Works in Machine Learning

To understand how audio separation works in AI systems, it helps to break the process into stages.

1. Audio Representation

Raw audio is first converted into a representation the model can understand, commonly a spectrogram. A spectrogram shows how frequency content changes over time, making patterns easier to detect.

This step matters because many AI audio separation models apply image-style pattern recognition to the spectrogram, although some architectures operate directly on the raw waveform instead.
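To make the spectrogram idea concrete, here is a small sketch of a short-time Fourier transform written with only the standard library. The frame length, hop size, and function names are illustrative assumptions; practical systems use optimized FFT routines and much longer frames.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def stft_magnitude(signal, frame_len=32, hop=16):
    """Return a list of frames, each a list of magnitudes for bins 0..frame_len//2."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + i] * window[i] for i in range(frame_len)]
        bins = []
        for k in range(frame_len // 2 + 1):
            coeff = sum(
                chunk[i] * cmath.exp(-2j * math.pi * k * i / frame_len)
                for i in range(frame_len)
            )
            bins.append(abs(coeff))
        frames.append(bins)
    return frames


# Toy input: a tone completing 4 cycles per 32-sample frame, so it should peak in bin 4.
signal = [math.sin(2 * math.pi * 4 * t / 32) for t in range(128)]
spectrogram = stft_magnitude(signal)
peak_bins = [frame.index(max(frame)) for frame in spectrogram]
```

Each row of `spectrogram` is one time step and each column one frequency bin, which is the two-dimensional "picture" of sound that later stages work on.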

2. Feature Extraction

The model extracts meaningful features from the spectrogram, such as harmonic structures, temporal rhythms, and frequency contours. These features help the system distinguish between speech, music, and noise.

Understanding how audio separation works at this stage explains why AI can outperform simple filters: it is not just removing frequencies, it is recognizing sound identities.

3. Source Estimation

The model predicts which parts of the audio belong to each source. This can involve estimating masks that “keep” speech while suppressing other sounds.

Modern AI audio separation models often use deep neural networks such as convolutional neural networks (CNNs) or transformers to perform this task.
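The masking step can be sketched with toy numbers. The example below computes an oracle ratio mask from known speech and noise magnitudes, which is only possible when the clean sources are available; in a trained system a neural network would predict the mask from the mixture alone. The function names and all values are assumptions for illustration.

```python
def ratio_mask(speech_mag, noise_mag, eps=1e-8):
    """Per-bin soft mask in [0, 1]: the share of energy attributed to speech."""
    return [
        [s / (s + n + eps) for s, n in zip(s_frame, n_frame)]
        for s_frame, n_frame in zip(speech_mag, noise_mag)
    ]


def apply_mask(mask, mixture_mag):
    """Scale each time-frequency bin of the mixture by its mask value."""
    return [
        [m * x for m, x in zip(m_frame, x_frame)]
        for m_frame, x_frame in zip(mask, mixture_mag)
    ]


# Toy magnitude spectrograms: 2 frames x 4 frequency bins (values are made up).
speech = [[0.0, 4.0, 1.0, 0.0], [0.0, 3.0, 2.0, 0.0]]
noise = [[2.0, 0.0, 1.0, 0.5], [2.0, 0.0, 2.0, 0.5]]
# Simplifying assumption: magnitudes add, which ignores phase interactions.
mixture = [[s + n for s, n in zip(sf, nf)] for sf, nf in zip(speech, noise)]

mask = ratio_mask(speech, noise)
estimated_speech = apply_mask(mask, mixture)
```

Bins dominated by speech get a mask value near 1 and are kept, bins dominated by noise get a value near 0 and are suppressed, and bins shared equally land near 0.5.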

4. Reconstruction

Finally, the separated components are reconstructed back into time-domain audio signals. The result is multiple clean tracks derived from a single mixed input.
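Reconstruction is commonly done with an inverse short-time Fourier transform and overlap-add. The sketch below is a round trip under stated assumptions: a periodic Hann window, a hop of half the frame length, and a naive DFT from the standard library. All names and sizes are illustrative rather than taken from any particular tool.

```python
import cmath
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def stft(signal, frame_len, hop):
    """Windowed complex DFT of each frame (naive, for illustration only)."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + i] * window[i] for i in range(frame_len)]
        frames.append([
            sum(chunk[i] * cmath.exp(-2j * math.pi * k * i / frame_len)
                for i in range(frame_len))
            for k in range(frame_len)
        ])
    return frames


def istft(frames, frame_len, hop):
    """Inverse DFT per frame, then overlap-add, normalised by the summed window."""
    window = hann(frame_len)
    length = hop * (len(frames) - 1) + frame_len
    output = [0.0] * length
    weight = [0.0] * length
    for index, spectrum in enumerate(frames):
        start = index * hop
        for i in range(frame_len):
            sample = sum(
                spectrum[k] * cmath.exp(2j * math.pi * k * i / frame_len)
                for k in range(frame_len)
            ).real / frame_len
            output[start + i] += sample
            weight[start + i] += window[i]
    # Samples where the window sum is ~0 (the very first one) cannot be recovered.
    return [o / w if w > 1e-8 else 0.0 for o, w in zip(output, weight)]


FRAME, HOP = 16, 8
original = [math.sin(0.3 * t) + 0.5 * math.cos(1.1 * t) for t in range(64)]
rebuilt = istft(stft(original, FRAME, HOP), FRAME, HOP)
# Compare away from the edges, where fewer frames overlap.
max_error = max(abs(a - b) for a, b in zip(original[HOP:-HOP], rebuilt[HOP:-HOP]))
```

In a separation pipeline, the predicted mask would be applied to the frames between the `stft` and `istft` calls, once per source, yielding one time-domain track for each.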

Types of AI Audio Separation Models

There are several categories of AI audio separation models, each designed for specific use cases.

Speech vs. Noise Separation

These models focus on isolating human speech from environmental noise. They are commonly used in call enhancement, transcription, and accessibility tools.

Music Source Separation

Music-focused models separate vocals, drums, bass, and instruments. While not speech-centric, they demonstrate how audio separation works across different domains.

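Speaker Separation

These models isolate individual speakers from a single recording, which is particularly valuable in interviews, meetings, and podcasts. The task is related to, but distinct from, speaker diarization, which labels who spoke when without producing separate audio tracks.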

Universal Models

Some modern AI audio separation models aim to handle all of the above tasks using a single architecture, adapting dynamically to different audio types.

Training Data: The Hidden Engine

A crucial but often overlooked part of how audio separation works is the training data. AI models require massive datasets of mixed audio paired with clean reference tracks.

These datasets allow the model to learn subtle distinctions, such as:

- Breath sounds vs. background hiss
- Vocal harmonics vs. musical instruments
- Echoed speech vs. ambient noise

The quality and diversity of training data largely determine how well AI audio separation models perform in real-world conditions.
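One common way to build such pairs is to mix a clean recording with noise at a chosen signal-to-noise ratio, so the clean track is known by construction. The sketch below assumes synthetic stand-ins for speech and noise; `mix_at_snr` and the 5 dB target are hypothetical choices for illustration.

```python
import math
import random


def power(signal):
    """Mean squared amplitude."""
    return sum(x * x for x in signal) / len(signal)


def mix_at_snr(clean, noise, snr_db):
    """Scale the noise so clean-to-noise power equals snr_db, then add it to clean."""
    target_noise_power = power(clean) / (10 ** (snr_db / 10))
    gain = math.sqrt(target_noise_power / power(noise))
    scaled_noise = [gain * n for n in noise]
    mixture = [c + n for c, n in zip(clean, scaled_noise)]
    return mixture, scaled_noise


rng = random.Random(0)  # fixed seed so the example is repeatable
clean = [math.sin(2 * math.pi * 3 * t / 200) for t in range(200)]  # stand-in for speech
noise = [rng.uniform(-1.0, 1.0) for _ in range(200)]               # stand-in for background

mixture, scaled_noise = mix_at_snr(clean, noise, snr_db=5.0)
# One training example: the model sees `mixture` and is trained to output `clean`.
training_pair = (mixture, clean)
achieved_snr_db = 10 * math.log10(power(clean) / power(scaled_noise))
```

Varying the noise type and the SNR across many such pairs is one way to get the diversity the paragraph above describes.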

Real-World Tools Using AI Audio Separation

Many modern tools put these concepts into action. Platforms like Fish Audio and SAM audio leverage AI audio separation models to deliver user-friendly solutions without requiring technical expertise.

Fish Audio, for example, allows users to upload a file and automatically separate speech from background noise or overlapping voices. SAM audio similarly applies advanced models to handle complex audio scenarios, making professional-grade separation accessible to everyday users.

These tools show audio separation in practice: not as abstract theory, but as a working production workflow.

Accuracy vs. Artifacts

No discussion of AI audio separation models is complete without addressing artifacts. Artifacts are unwanted distortions or residual sounds left behind after separation.

Common artifacts include:

- Metallic or robotic speech tones
- Residual background noise
- Sudden volume fluctuations

Understanding how audio separation works helps users minimize these issues by using clean source audio, adjusting model parameters, and combining AI separation with manual editing.
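When a clean reference track is available, residual noise can be given a number. The sketch below uses scale-invariant signal-to-distortion ratio (SI-SDR), a metric often used in separation research; it is brought in here as an illustration and is not something the tools above are known to report. The test signals are synthetic.

```python
import math


def si_sdr(estimate, reference):
    """Scale-invariant signal-to-distortion ratio in dB (higher is cleaner)."""
    dot = sum(e * r for e, r in zip(estimate, reference))
    ref_energy = sum(r * r for r in reference)
    scale = dot / ref_energy
    target = [scale * r for r in reference]            # part of the estimate matching the reference
    error = [e - t for e, t in zip(estimate, target)]  # everything else: artifacts and leakage
    target_energy = sum(t * t for t in target)
    error_energy = sum(x * x for x in error)
    return 10 * math.log10(target_energy / error_energy)


reference = [math.sin(2 * math.pi * 4 * t / 100) for t in range(100)]
leak = [math.sin(2 * math.pi * 9 * t / 100) for t in range(100)]  # residual background

light_leak = [r + 0.1 * n for r, n in zip(reference, leak)]
heavy_leak = [r + 0.5 * n for r, n in zip(reference, leak)]

score_light = si_sdr(light_leak, reference)
score_heavy = si_sdr(heavy_leak, reference)
```

With these inputs the lightly contaminated estimate scores about 20 dB and the heavily contaminated one about 6 dB. A single number like this does not capture how an artifact sounds, so listening tests remain useful alongside it.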

Computational Considerations

AI audio separation models can be computationally intensive. Larger models tend to be more accurate but require more processing power.

Cloud-based tools offload this burden to remote servers, while local tools require powerful CPUs or GPUs. This tradeoff explains why some users prefer online platforms like Fish Audio, while others choose offline open-source solutions.
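On limited local hardware, one widely used workaround is to process long recordings in overlapping chunks and blend the results. The sketch below assumes a placeholder `separate_fn` that stands in for a model call; the chunk size, overlap, and triangular blending weights are illustrative choices, not settings from any specific tool.

```python
def process_in_chunks(signal, separate_fn, chunk_len=1000, overlap=200):
    """Run separate_fn on overlapping chunks and blend the results.

    separate_fn is a placeholder for any model call that maps a chunk of
    samples to an equally long chunk of separated samples.
    """
    hop = chunk_len - overlap
    output = [0.0] * len(signal)
    weight = [0.0] * len(signal)
    start = 0
    while start < len(signal):
        chunk = signal[start:start + chunk_len]
        separated = separate_fn(chunk)
        n = len(chunk)
        for i in range(n):
            # Triangular fade: low weight near chunk edges, high in the middle.
            w = min(i + 1, n - i)
            output[start + i] += w * separated[i]
            weight[start + i] += w
        if start + chunk_len >= len(signal):
            break
        start += hop
    # Dividing by the summed weights turns the overlaps into a crossfade.
    return [o / w for o, w in zip(output, weight)]


def identity_model(chunk):
    """Stand-in for a real separator; returns its input unchanged."""
    return list(chunk)


audio = [float(i % 50) for i in range(3500)]
result = process_in_chunks(audio, identity_model)
max_error = max(abs(a - b) for a, b in zip(audio, result))
```

Only one chunk needs to be held by the model at a time, so peak memory depends on `chunk_len` rather than on the length of the recording. The crossfade softens audible seams where chunk boundaries meet.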

How Audio Separation Works for Transcription

One of the most impactful applications of AI audio separation models is transcription. Clean audio leads to higher transcription accuracy, especially in recordings with multiple speakers or background noise.

By separating speech first, transcription engines receive a clearer signal, reducing word errors and speaker confusion. This workflow highlights how audio separation works as a foundational step rather than a standalone feature.

Limitations of AI Audio Separation Models

Despite their power, AI audio separation models are not perfect. Limitations include:

- Difficulty with heavily overlapping speech
- Reduced performance on unseen sound types
- Dependency on training data diversity

Understanding how audio separation works realistically helps set proper expectations and encourages hybrid workflows that combine AI with human oversight.

The Future of AI Audio Separation

The future of AI audio separation models lies in adaptability and multimodal learning. Researchers are exploring systems that combine audio with visual cues, text context, and speaker identity.

As models grow more efficient, real-time separation will become standard in communication tools, video conferencing platforms, and live broadcasting.

Advances in self-supervised learning may also reduce the need for labeled datasets, further improving how audio separation works across languages and environments.

Best Practices for Using Audio Separation Tools

To get the most out of AI audio separation models, consider these best practices:

- Record the cleanest audio possible
- Use separation as a staged process
- Combine AI output with manual refinement
- Always keep original recordings

These steps help ensure that the theory of audio separation translates into usable, professional-quality results.

Conclusion

AI-driven sound processing has reached a point where complex tasks once reserved for specialists are now accessible to everyone, and understanding how audio separation works reveals why this shift is so transformative. From neural networks and spectrogram analysis to real-world tools like Fish Audio and SAM audio, the technology behind audio separation continues to evolve rapidly. As these systems become more accurate, efficient, and widely available, AI audio separation models will remain at the core of how we clean, analyze, and enhance sound in the modern digital world.