5 Best Real-Time Voice Cloning APIs for 2026

Dec 20, 2025

Real-time voice generation is a core component of many applications built in 2026, from conversational chatbots to AI companions and customer support agents. Once speech is generated live rather than produced and delivered asynchronously, the bar for a speech generation API changes. Latency starts to matter, and every flaw is instantly audible to the listener: delays feel awkward, flat delivery sounds fake, and a voice that drifts or glitches breaks trust on the spot. This is especially true for AI agents, live NPCs, voice assistants, customer support bots, and anything else that speaks back while a human is waiting.

In 2026, real-time voice cloning is mature enough to bring realism and engagement to many products. Teams expect low latency, stable voice identity, and enough control to make speech sound intentional. The APIs below are the ones that hold up best for your users once they are pushed to production.

What Matters for Real-Time Voice Cloning

Real-time voice has stricter requirements than batch text-to-speech:

Latency. Anything above a short pause feels unnatural in conversation.

Voice stability. The cloned voice must stay recognizable across different emotions and sentence lengths.

Streaming control. You need partial outputs, interruptions, and smooth transitions, not just full audio files.

Scalability. Real-time traffic spikes. APIs have to stay dependable when load jumps.

If you’re building live agents, conversational NPCs, or call-based systems, these factors matter more than raw audio polish.
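The latency and streaming-control requirements above can be made concrete with a small consumer loop. The sketch below is a minimal illustration under stated assumptions, not any vendor's SDK: `fake_tts_stream` is a hypothetical stand-in for a streaming TTS client that yields audio chunks, and `play_until_interrupted` is a hypothetical helper that records time to first chunk and stops playback as soon as an interruption event fires (for example, when voice activity detection hears the user).

```python
import asyncio
import time
from typing import AsyncGenerator


async def fake_tts_stream(text: str, chunk_delay: float = 0.01) -> AsyncGenerator[bytes, None]:
    # Hypothetical stand-in for a vendor's streaming TTS call: one "audio" chunk per word.
    for word in text.split():
        await asyncio.sleep(chunk_delay)  # simulated model + network time per chunk
        yield word.encode()  # placeholder for real PCM/Opus bytes


async def play_until_interrupted(
    stream: AsyncGenerator[bytes, None], interrupted: asyncio.Event
) -> dict:
    """Consume chunks as they arrive, timing the first one and stopping on barge-in."""
    start = time.perf_counter()
    first_chunk_ms = None
    chunks_played = 0
    try:
        async for chunk in stream:
            if first_chunk_ms is None:
                first_chunk_ms = (time.perf_counter() - start) * 1000
            if interrupted.is_set():
                break  # the user started talking: drop the rest of this utterance
            chunks_played += 1  # a real app would write `chunk` to the audio device here
    finally:
        await stream.aclose()  # stop generation upstream instead of leaving it running
    return {"first_chunk_ms": first_chunk_ms, "chunks_played": chunks_played}


async def main() -> None:
    interrupted = asyncio.Event()
    stream = fake_tts_stream("Sure, I can help you reset your password today.")
    # Simulate the user talking over the agent roughly 35 ms into playback.
    asyncio.get_running_loop().call_later(0.035, interrupted.set)
    print(await play_until_interrupted(stream, interrupted))


if __name__ == "__main__":
    asyncio.run(main())
```

Time to first chunk is usually the number users feel most. In a real deployment, measure it from the moment the user stops speaking to the moment audio starts playing, so network and playback buffering are included rather than just model time.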

Top Real-Time Voice Cloning APIs (2026)

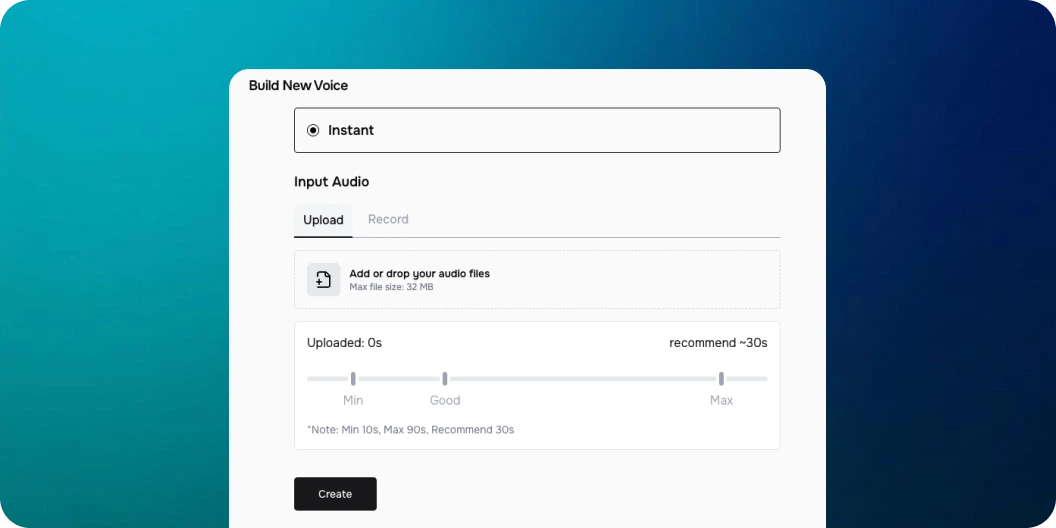

1. Fish Audio

Fish Audio is the strongest real-time voice cloning API available right now. It combines low-latency streaming with expressive delivery that doesn’t collapse under live conditions. Voice cloning works from short samples and stays consistent even when emotions shift mid-conversation.

- Use cases: AI agents, live NPCs, voice companions, real-time apps

- Strength: expressive realism with stable voice identity

- API: real-time streaming, batch generation, SDKs

Fish Audio supports emotion control at generation time, which lets developers shape tone instead of baking everything into static prompts. With latency under 500 ms, it is well suited to conversations that feel natural. This makes it viable not just for demos, but for production systems that users talk to daily.

2. ElevenLabs

ElevenLabs offers real-time capabilities alongside its batch generation tools.

- Use cases: live narration, conversational agents

- Strength: clean output and a broad voice library

- Notes: emotional steering is more limited and costs rise quickly at scale

It works well for predictable dialogue, but less so when speech needs to react dynamically to user behavior.

3. Cartesia

Cartesia is built specifically with low-latency speech in mind.

- Use cases: fast-response agents, interactive systems

- Strength: very low latency

- Notes: emotional depth is more limited than Fish Audio's

If speed is your top priority and tone is secondary, Cartesia is easy to wire into live pipelines.

4. Hume

Hume emphasizes emotional modulation over raw stability.

- Use cases: expressive conversational agents, experimental interfaces

- Strength: strong emotional variation

- Notes: less consistent over long live sessions and can hallucinate phrasing

It can add texture to short interactions, but needs careful guardrails in production.

5. Speechify

Speechify supports real-time use cases in a limited capacity.

- Use cases: simple live read-outs, accessibility tools

- Strength: clear and predictable speech

- Notes: minimal control for live conversational systems

It’s better suited for read-aloud scenarios than full conversational agents.

Practical Tips for Real-Time Voice Systems

A few lessons that come up quickly in live deployments:

- Test latency end-to-end. Network, model, and playback all add up.

- Limit emotional extremes. Over-steering emotion causes instability in live speech.

- Design interruption handling. Users talk over agents. Your voice system should handle it.

- Monitor drift. Spot-check voice identity over long sessions and regenerate speech when needed.
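One way to spot-check drift, sketched under assumptions: compute a speaker embedding for each generated segment with a speaker-verification model of your choice (not shown here), compare it to the embedding of the reference clip with cosine similarity, and flag segments that fall below a threshold as candidates for regeneration. The function names, the toy three-dimensional vectors, and the 0.75 threshold are all hypothetical; real embeddings are much longer and the threshold would need tuning against your own voices.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_drifted_segments(
    reference: Sequence[float],
    segments: Sequence[Sequence[float]],
    threshold: float = 0.75,  # hypothetical cutoff; tune per voice and embedding model
) -> list[int]:
    """Return indices of segments whose speaker embedding strays from the reference."""
    return [
        i for i, emb in enumerate(segments)
        if cosine_similarity(reference, emb) < threshold
    ]


if __name__ == "__main__":
    reference = [0.9, 0.1, 0.4]  # toy embedding of the original voice sample
    session = [[0.88, 0.12, 0.41], [0.85, 0.15, 0.38], [0.1, 0.9, 0.2]]
    print(find_drifted_segments(reference, session))  # → [2]
```

Flagged indices point at the segments worth regenerating or reviewing by ear; the check is cheap enough to run on a sample of segments from every long session.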

Fish Audio performs well under these conditions because its real-time pipeline is designed for continuous use rather than one-off clips.

Final Thoughts

Real-time voice cloning has requirements beyond those of basic AI TTS platforms. Systems that sound fine asynchronously can struggle when speech needs to respond instantly and consistently. That’s why API design, streaming behavior, and emotional control matter more than a flashy demo.

In 2026, Fish Audio stands out as the most balanced real-time voice cloning solution. It delivers expressive, stable speech without forcing developers to trade realism for speed.

If your product depends on live conversation, that balance is the difference between something people try once and something they actually use.